E-commerce Website

During my time with Rice App's OSA in the summer of '23, my team and I developed Owl Mart, a platform designed to streamline buying and selling on campus. Because the project was new, there was no existing codebase, so we built everything from scratch.

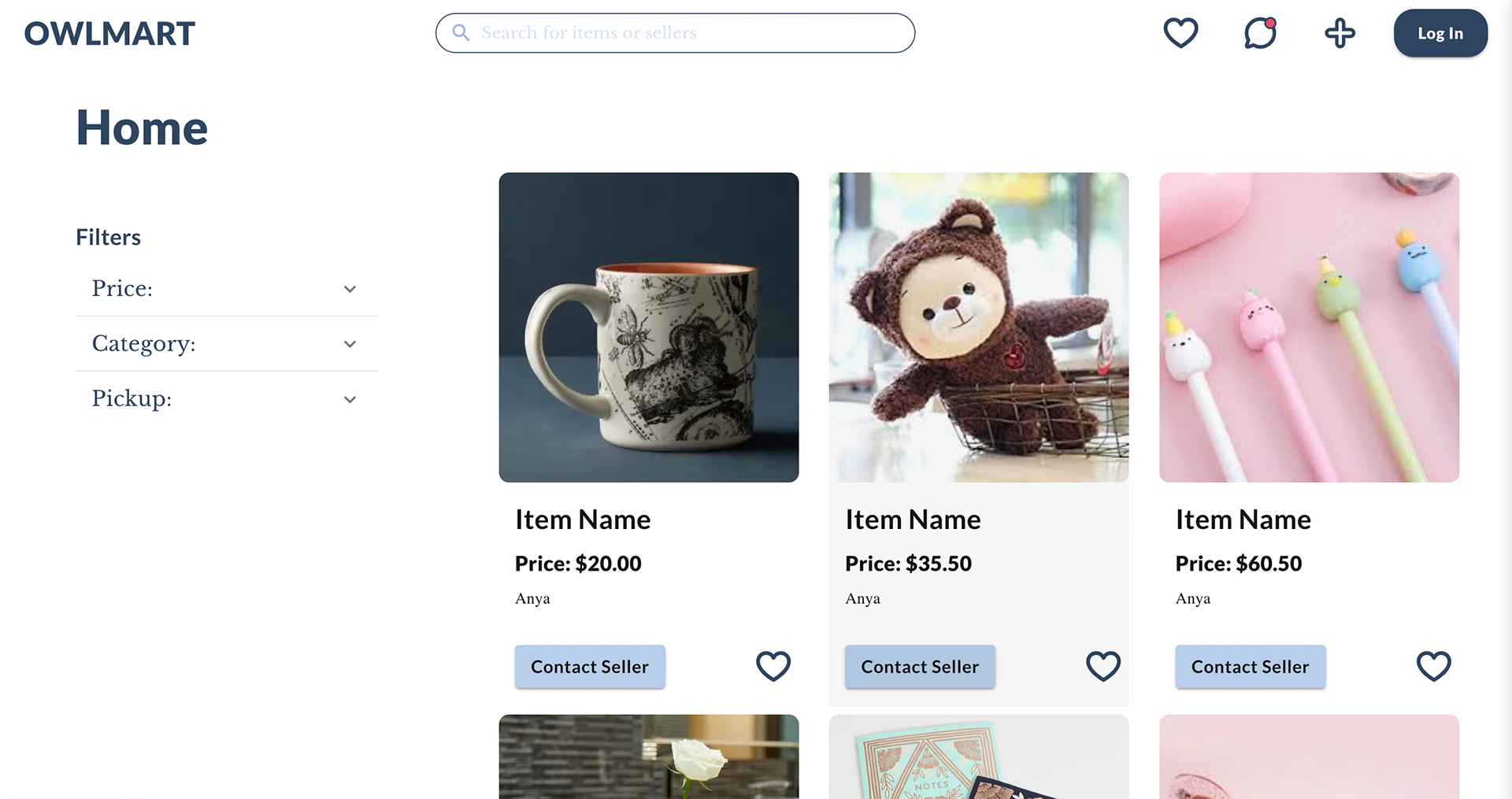

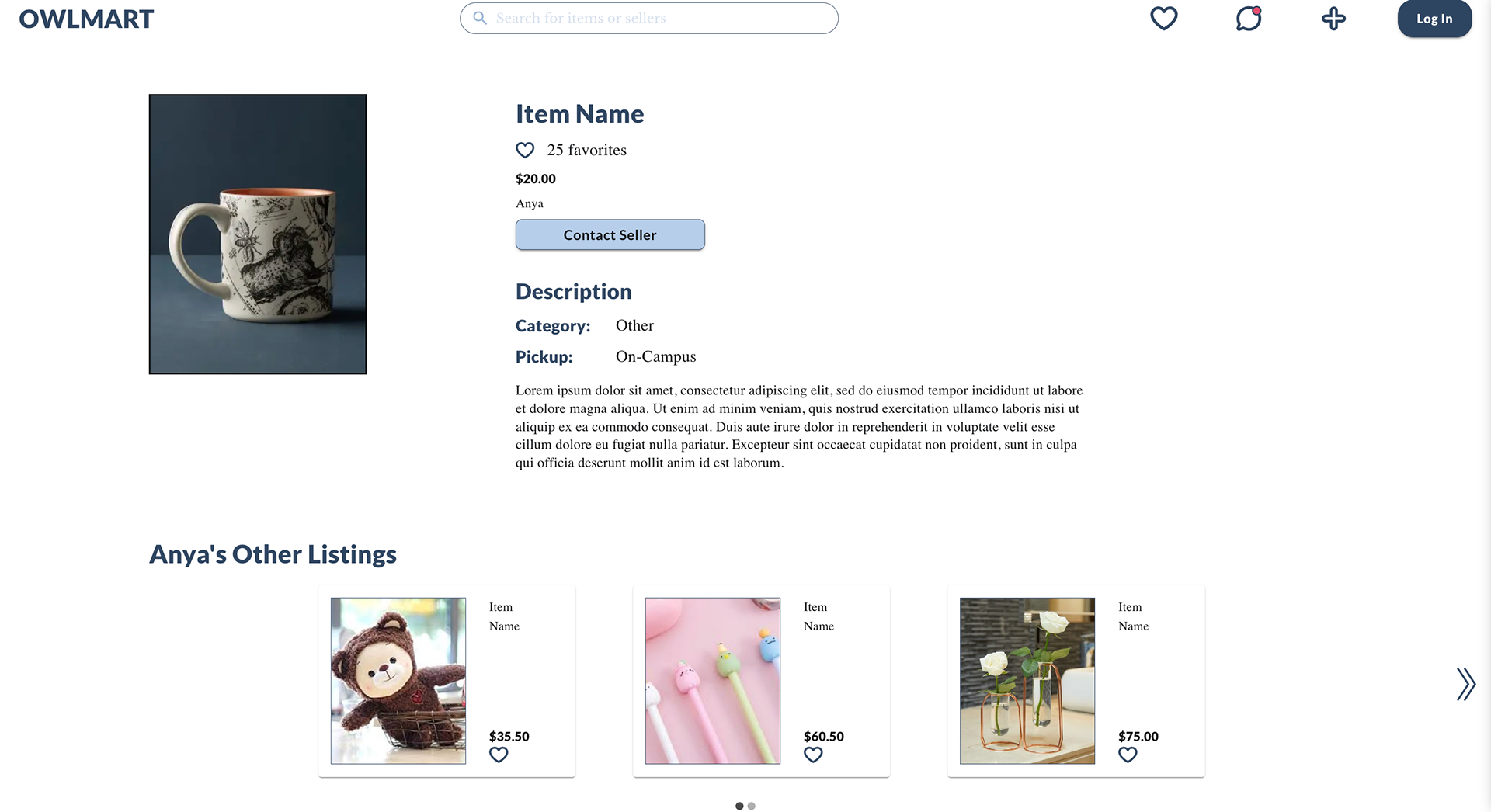

I was responsible for implementing the Home/Favorites page, the Navbar, and the individual item page, using ReactJS for the front end with Material UI as the primary library for UI components.

On the Home and Favorites page, I made each product card clickable, routing the user (via React-Router) to a detailed item page with comprehensive information about the product. This page also features a carousel of other listings from the same seller, automatically excluding the item currently being viewed.

In addition to the front-end tasks, I worked on the backend with GraphQL and Apollo Server, writing the update-listing mutations and the user query resolvers.

Lastly, I learned Git and how to structure and manage the files of a React web project.

Classify Handwritten Digits from the MNIST Dataset

Just a snippet of the full code!

I used a neural network, built as a sequential model with the Keras library, to classify handwritten digits from the MNIST dataset. I reached over 90% accuracy using 3 layers and 5 epochs, and learned more about neural networks along the way.

In particular, I learned about different activation functions, optimizers, and loss functions.
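As a rough illustration of what an activation function and a loss function compute, here is a framework-free sketch in plain Python. It is not the project code: Keras provides these as built-ins, and the function names and the 3-class toy scores below are made up for the example.

```python
import math

def relu(values):
    """ReLU activation: negative inputs become 0, positive inputs pass through."""
    return [max(0.0, v) for v in values]

def softmax(logits):
    """Softmax activation: turns raw scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probabilities, true_class):
    """Cross-entropy loss for one example: -log of the probability of the correct class."""
    return -math.log(probabilities[true_class])

# Hypothetical raw scores for a 3-class toy problem (MNIST itself has 10 classes).
hidden = relu([-1.0, 0.5, 2.0])
probs = softmax(hidden)
loss = cross_entropy(probs, true_class=2)
```

The loss shrinks toward 0 as the probability assigned to the correct class approaches 1, which is what the optimizer tries to achieve during training.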

I also intentionally overfit the data with another model in order to learn regularization techniques. With this model, I tested early stopping, dropout, and L2 regularization to prevent overfitting and to learn how each technique behaves.
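To make two of these techniques concrete, here is a minimal plain-Python sketch of the rule behind early stopping and of the L2 penalty term. It is an illustration under assumptions, not the code I ran: the function names, the `patience` and `strength` values, and the validation losses are all invented for the example. In Keras, the same ideas are available through built-in callbacks and regularizers.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the epoch at which training would stop.

    Training stops once the validation loss has failed to improve for
    `patience` consecutive epochs. If that never happens, the index of
    the last epoch is returned.
    """
    best_loss = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses) - 1

def l2_penalty(weights, strength=0.01):
    """L2 regularization term: strength times the sum of squared weights."""
    return strength * sum(w * w for w in weights)

# Made-up validation losses: they improve, then rise as the model overfits.
val_losses = [0.90, 0.60, 0.45, 0.47, 0.52, 0.60]
stop_epoch = early_stopping_epoch(val_losses, patience=2)
```

With these made-up numbers, the loss stops improving after the third epoch (index 2), so training halts two epochs later. The L2 penalty is added to the training loss, which discourages large weights.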

Unsupervised Learning with Autoencoders

The autoencoder successfully reconstructed the input test images

Using the Keras library, I trained an autoencoder on the Fashion-MNIST dataset to learn more about unsupervised learning.

I trained the autoencoder for 10 epochs with a mean-squared error loss function and achieved a loss of less than 1% between the input and the output on the training data. After training, I ran the test data through the autoencoder to see how well it reconstructed noisy images. The results are shown.
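For reference, the mean-squared error between an image and its reconstruction can be sketched in plain Python as below. This is only an illustration: the helper names, the 4-pixel toy image, and the Gaussian noise with a fixed seed are assumptions made for the example, not how the noisy test images were actually produced.

```python
import random

def mean_squared_error(original, reconstructed):
    """Average of the squared pixel differences between two equally sized images."""
    if len(original) != len(reconstructed):
        raise ValueError("images must have the same number of pixels")
    return sum((o - r) ** 2 for o, r in zip(original, reconstructed)) / len(original)

def add_noise(image, noise_level, rng):
    """Add Gaussian noise to each pixel and clip the result back into [0, 1]."""
    return [min(1.0, max(0.0, p + rng.gauss(0.0, noise_level))) for p in image]

# A made-up 4-pixel "image" with values scaled to [0, 1].
image = [0.0, 0.25, 0.5, 1.0]
noisy = add_noise(image, noise_level=0.1, rng=random.Random(0))

perfect_loss = mean_squared_error(image, image)  # identical images give 0.0
noisy_loss = mean_squared_error(image, noisy)    # grows with the amount of noise
```

A well-trained autoencoder drives this value toward 0 on the training data; comparing a clean image with the reconstruction of its noisy version shows how much of the noise the model removes.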

Ultimately, I am satisfied with how well the autoencoder was trained.